The code provides a Yes/No prediction of mistakes in data entries by fitting a binary classifier on bootstrap samples. Leo Breiman (1928–2005) was a statistician at the University of California, Berkeley, whose work bridged statistics and computer science, particularly in machine learning. His contributions include classification and regression trees, ensembles of trees, and random forests built on bootstrap samples. Bootstrap aggregation was named bagging by Breiman.
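As a rough illustration of bagging, the sketch below draws bootstrap samples (sampling with replacement) from a small list of Yes/No labels and combines the individual predictions by majority vote. The data and the helper names `bootstrap_sample` and `majority_vote` are made up for illustration, and the "model" is deliberately trivial; a random forest would fit a decision tree on each sample instead.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def majority_vote(labels):
    """Return the most common label."""
    return Counter(labels).most_common(1)[0][0]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
labels = ['No'] * 8 + ['Yes'] * 2  # toy Yes/No data, not the real dataset

# Each "model" is trivial: it predicts the majority label of its own bootstrap sample
votes = [majority_vote(bootstrap_sample(labels, rng)) for _ in range(25)]

# Bagging aggregates the individual predictions into a single one
bagged_prediction = majority_vote(votes)
print(bagged_prediction)
```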

category_encoders - a set of scikit-learn-style transformers that encode categorical variables as numeric values using a range of techniques.

ce.CountEncoder()

https://contrib.scikit-learn.org/category_encoders/index.html
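Count encoding replaces each category with the number of times it occurs. The sketch below reproduces that idea with plain pandas on made-up data, so the column name `job_type` and its values are assumptions; `ce.CountEncoder()` is meant to do the same job behind a scikit-learn-style `fit`/`transform` interface.

```python
import pandas as pd

# Toy data with a hypothetical column name, not the real dataset
toy = pd.DataFrame({'job_type': ['full', 'part', 'full', 'contract', 'full']})

# Count how often each category occurs, then map each row to its category count
counts = toy['job_type'].value_counts()
toy['job_type_count'] = toy['job_type'].map(counts)

print(toy)
```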

sklearn.model_selection - Tools for splitting data into training and test sets, cross-validation, and hyperparameter tuning. A model is fitted on a training dataset and then evaluated on held-out data, which gives an estimate of how accurately it will predict new observations.
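A minimal sketch of the train/test split on toy data follows. The feature rows and labels are invented for illustration; `test_size=0.3` and `random_state=12` match the values used later in this post.

```python
from sklearn.model_selection import train_test_split

X = [[i] for i in range(10)]          # ten toy feature rows
y = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]    # toy binary labels

# Hold out 30% of the rows for testing; random_state makes the split repeatable
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=12)

print(len(X_train), len(X_test))
```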

sklearn.ensemble - The sklearn.ensemble module includes two averaging algorithms based on randomized decision trees: the RandomForest algorithm and the Extra-Trees method.

https://scikit-learn.org/stable/modules/ensemble.html

roc_auc_score - Compute Area Under the Receiver Operating Characteristic Curve (ROC AUC) from prediction scores.

https://scikit-learn.org/stable/modules/generated/sklearn.metrics.roc_auc_score.html

rfc = RandomForestClassifier() - A random forest is a meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting.

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html

Receiver Operating Characteristic Curve - A receiver operating characteristic curve, or ROC curve, is a graphical plot that illustrates the diagnostic ability of a binary classifier system as its discrimination threshold is varied. The Area Under the Curve (AUC) is the measure of the ability of a classifier to distinguish between classes and is used as a summary of the ROC curve. The higher the AUC, the better the performance of the model at distinguishing between the positive and negative classes.
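One way to read the AUC is as the fraction of (positive, negative) pairs in which the positive example receives the higher score. The sketch below computes that fraction directly in plain Python; the function name `pairwise_auc` and the scores are made up for illustration, and this is not how scikit-learn computes the value internally.

```python
def pairwise_auc(y_true, y_scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, y_scores) if t == 1]
    neg = [s for t, s in zip(y_true, y_scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Three of the four positive/negative pairs are ranked correctly
print(pairwise_auc([0, 0, 1, 1], [0.2, 0.6, 0.5, 0.9]))  # 0.75
```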

# Import the Yes/No data file of job web advertisements; the column that the publishers call fraudulent records whether an online advertisement contains a mistake

from google.colab import drive

drive.mount('/content/gdrive')

import pandas as pd

df=pd.read_csv('gdrive/MyDrive/Dataset_for_Colab/fake_job_postings.csv')

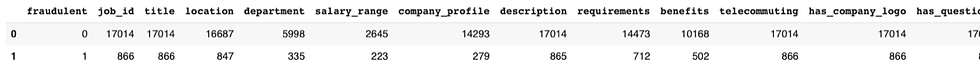

df

# Count mistaken entries

count_mistakes = df.groupby('fraudulent').count()

count_mistakes.reset_index(inplace=True)

count_mistakes

# 4.8% of data has 1 in column fraudulent
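A share like this can be read off with `value_counts(normalize=True)`. The sketch below uses a toy column rather than the real dataset, so the 5% it prints is an artefact of the made-up data.

```python
import pandas as pd

# Toy labels, not the real dataset: 1 marks a flagged posting
toy = pd.DataFrame({'fraudulent': [0] * 19 + [1]})

# normalize=True returns fractions instead of raw counts
share = toy['fraudulent'].value_counts(normalize=True)
print(share.loc[1])  # 0.05
```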

# Plot counts

import plotly.express as px

fig = px.bar(count_mistakes, x='fraudulent', y='job_id',labels={'job_id': 'count'})

fig.show()

# Plot counts by top 10 job titles

title_counts = df['title'].value_counts().reset_index()

title_counts.columns = ['title', 'count']

fig = px.bar(title_counts.iloc[:10], x='title', y='count')

fig.show()

# Extract into boolean columns job titles that contain 'home' or 'remote'

df['home'] = df['title'].str.contains('home')

df['remote'] = df['title'].str.contains('remote')

# Check how many titles have 'home' or 'remote'

print(df['home'].value_counts())

print(df['remote'].value_counts())

print(df['telecommuting'].value_counts())

False 17875

True 5

Name: home, dtype: int64

False 17851

True 29

Name: remote, dtype: int64

0 17113

1 767

Name: telecommuting, dtype: int64
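`str.contains` is case-sensitive by default, so a title such as "Work from Home" is not counted by the checks above. The sketch below shows the `case=False` and `na=False` options on made-up titles; whether a case-insensitive match is wanted for this dataset is a judgment call.

```python
import pandas as pd

# Toy titles, not the real dataset
titles = pd.Series(['Work from Home Assistant', 'remote developer', 'Office Manager', None])

# Case-sensitive match: 'Home' with a capital H is missed
strict = titles.str.contains('home', na=False)

# case=False ignores capitalisation; na=False treats missing titles as no match
relaxed = titles.str.contains('home', case=False, na=False)

print(strict.sum(), relaxed.sum())  # 0 1
```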

!pip install category_encoders

import category_encoders as ce

count_enc = ce.CountEncoder()

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import roc_auc_score

df

df2 = df.drop(columns='fraudulent')

df2

# Split into train and test datasets

X_train, X_test, y_train, y_test = train_test_split(df2,df['fraudulent'],test_size=0.3,random_state=12)
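`RandomForestClassifier` expects numeric features, so text columns need to be encoded before fitting. One possible way to do that is sketched below on made-up data with hypothetical column names, using a pandas-based count encoding learned from the training rows only. It is an assumption about how the encoding step could look, not a record of what was run on the job postings data.

```python
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Toy data with hypothetical column names, not the real dataset
toy = pd.DataFrame({
    'job_type': ['full', 'part', 'full', 'contract', 'full',
                 'part', 'full', 'full', 'part', 'full'],
    'telecommuting': [0, 1, 0, 1, 0, 1, 0, 0, 1, 0],
    'fraudulent': [0, 1, 0, 1, 0, 1, 0, 0, 1, 0],
})

X_train, X_test, y_train, y_test = train_test_split(
    toy.drop(columns='fraudulent'), toy['fraudulent'],
    test_size=0.3, random_state=12)

# Learn category counts from the training rows only, so no test information leaks in
counts = X_train['job_type'].value_counts()
X_train = X_train.assign(job_type=X_train['job_type'].map(counts))
# Categories not seen during training get a count of 0
X_test = X_test.assign(job_type=X_test['job_type'].map(counts).fillna(0))

rfc = RandomForestClassifier(n_estimators=10, random_state=12)
rfc.fit(X_train, y_train)
print(rfc.predict(X_test))
```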

rfc = RandomForestClassifier()

rfc.fit(X_train, y_train)

predictions = rfc.predict(X_test)

score = roc_auc_score(y_test, predictions)

print('Score: {}'.format(score))
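`roc_auc_score` also accepts continuous scores, such as the predicted probability of the positive class, which lets the metric take the ranking of the predictions into account rather than only the hard 0/1 labels. The sketch below compares the two on synthetic data from `make_classification`; the dataset and parameter values are assumptions for illustration, and the printed numbers say nothing about the job postings data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced binary data (about 5% positives), not the real dataset
X, y = make_classification(n_samples=1000, n_features=10,
                           weights=[0.95], random_state=12)

# stratify=y keeps the class ratio the same in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=12, stratify=y)

rfc = RandomForestClassifier(random_state=12)
rfc.fit(X_train, y_train)

# Column 1 of predict_proba holds the estimated probability of class 1
proba = rfc.predict_proba(X_test)[:, 1]
labels = rfc.predict(X_test)

auc_proba = roc_auc_score(y_test, proba)
auc_labels = roc_auc_score(y_test, labels)
print('AUC from probabilities: {:.3f}'.format(auc_proba))
print('AUC from hard labels:   {:.3f}'.format(auc_labels))
```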

This model gives an area under the ROC curve of 0.94 (94%), which indicates that it separates the two classes well.
